
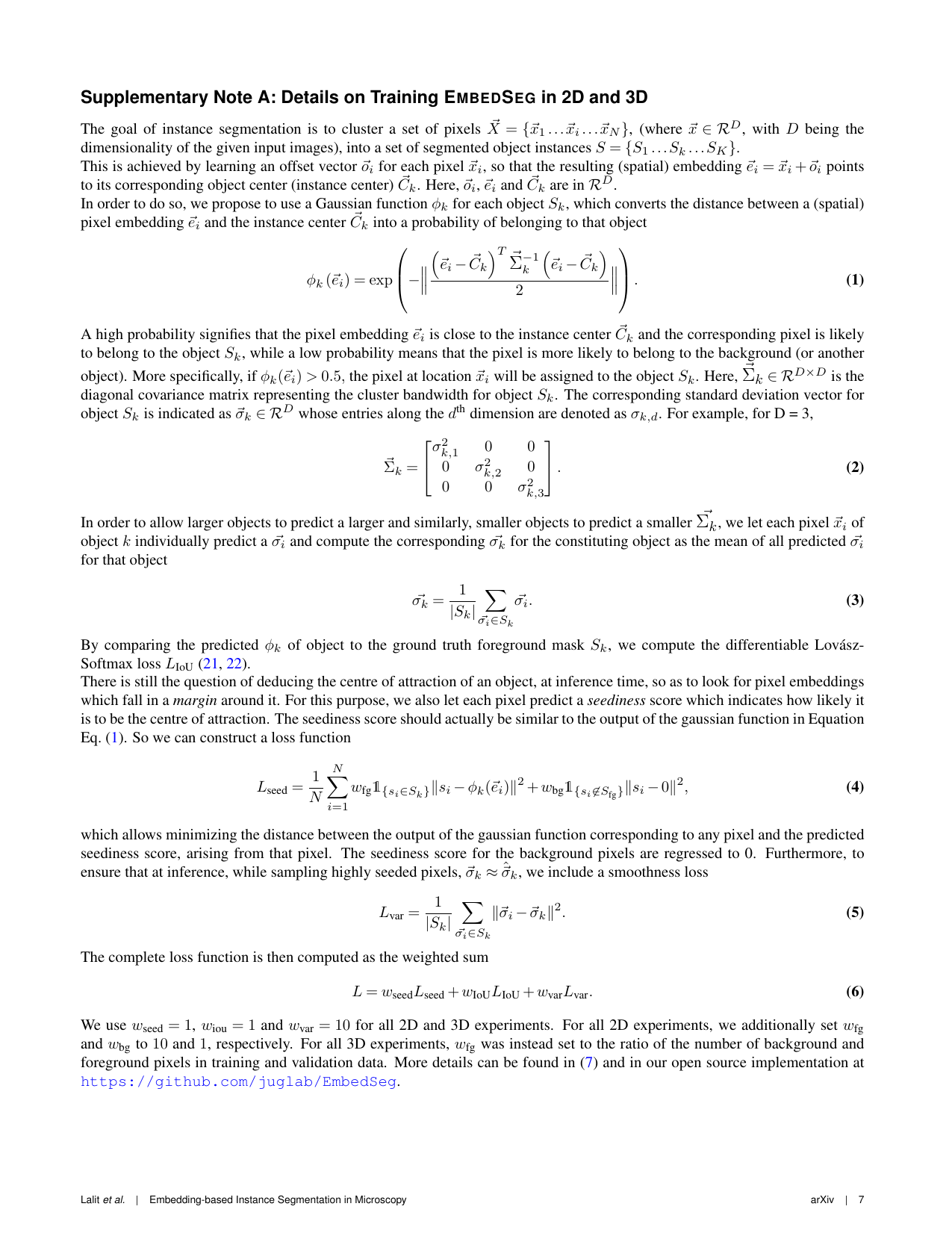

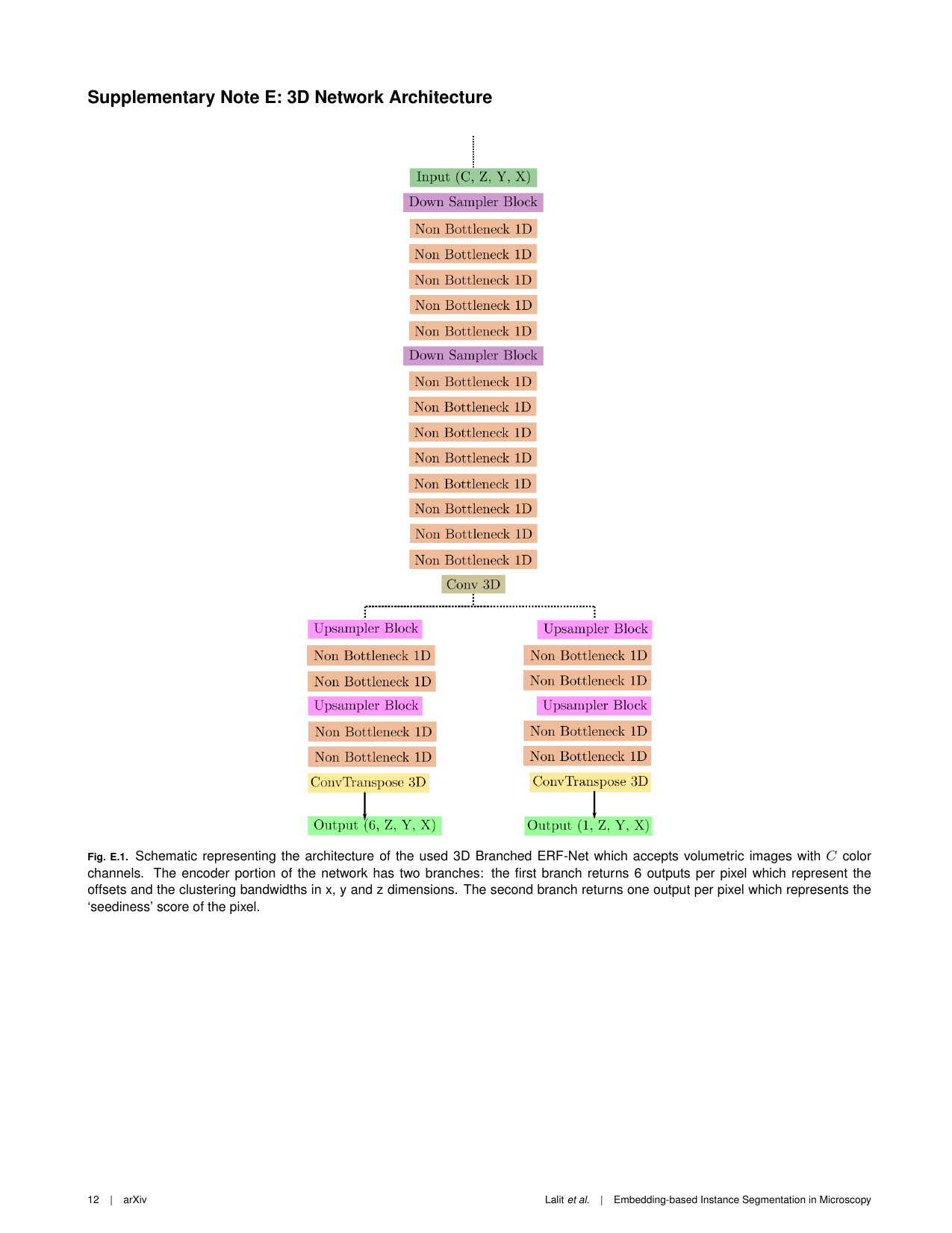

Automatic detection and segmentation of objects in 2D and 3D microscopy data is important for countless biomedical applications. In the natural-image domain, spatial embedding-based instance segmentation methods are known to yield high-quality results, but their utility for segmenting microscopy data has so far received little attention. Here we introduce EmbedSeg, an embedding-based instance segmentation method that outperforms existing state-of-the-art baselines on 2D as well as 3D microscopy datasets. Additionally, we show that EmbedSeg has a GPU memory footprint small enough to train even on laptop GPUs, making it accessible to virtually everyone. Finally, we introduce four new 3D microscopy datasets, which we make publicly available together with ground-truth training labels.
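The abstract does not describe how spatial embeddings are turned into instances. As a rough illustration of the general idea only, and not EmbedSeg's implementation, the sketch below assumes each pixel predicts an offset pointing to its object's centre plus a "seed" score. Adding the offset to the pixel's coordinate gives its spatial embedding, and pixels whose embeddings fall close to the embedding of a high-scoring seed pixel are grouped into one instance. The function name `cluster_embeddings`, the fixed Gaussian bandwidth `sigma`, and both thresholds are hypothetical; in practice the offsets and seed map would come from a trained network.

```python
import numpy as np


def cluster_embeddings(offsets, seed_map, sigma=2.0, seed_thresh=0.5, fg_thresh=0.5):
    """Greedily group pixels into instances using their spatial embeddings.

    offsets:  (2, H, W) array of predicted (dy, dx) from each pixel to its object centre
    seed_map: (H, W) array in [0, 1]; high values mark pixels near an object centre
    sigma:    fixed Gaussian bandwidth in pixels (hypothetical simplification)
    Returns an (H, W) int label image with 0 as background.
    """
    _, h, w = offsets.shape
    yy, xx = np.mgrid[0:h, 0:w]
    # Spatial embedding of each pixel = its own coordinate + its predicted offset.
    emb = np.stack([yy + offsets[0], xx + offsets[1]])  # (2, H, W)

    labels = np.zeros((h, w), dtype=np.int32)
    # Assumption: the seed map doubles as a foreground mask.
    unassigned = seed_map > fg_thresh
    next_id = 1
    while unassigned.any():
        # The unassigned pixel with the highest seed score defines the next centre.
        scores = np.where(unassigned, seed_map, -np.inf)
        cy, cx = np.unravel_index(np.argmax(scores), scores.shape)
        if seed_map[cy, cx] < seed_thresh:
            break
        centre = emb[:, cy, cx]
        # Gaussian score of every pixel's embedding relative to the chosen centre.
        dist2 = ((emb - centre[:, None, None]) ** 2).sum(axis=0)
        prob = np.exp(-dist2 / (2 * sigma ** 2))
        # The seed itself has prob = 1, so each iteration removes at least one pixel.
        members = unassigned & (prob > 0.5)
        labels[members] = next_id
        unassigned &= ~members
        next_id += 1
    return labels
```

The greedy "best seed first" loop is one simple way to cluster; it guarantees termination because every iteration assigns at least the seed pixel.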


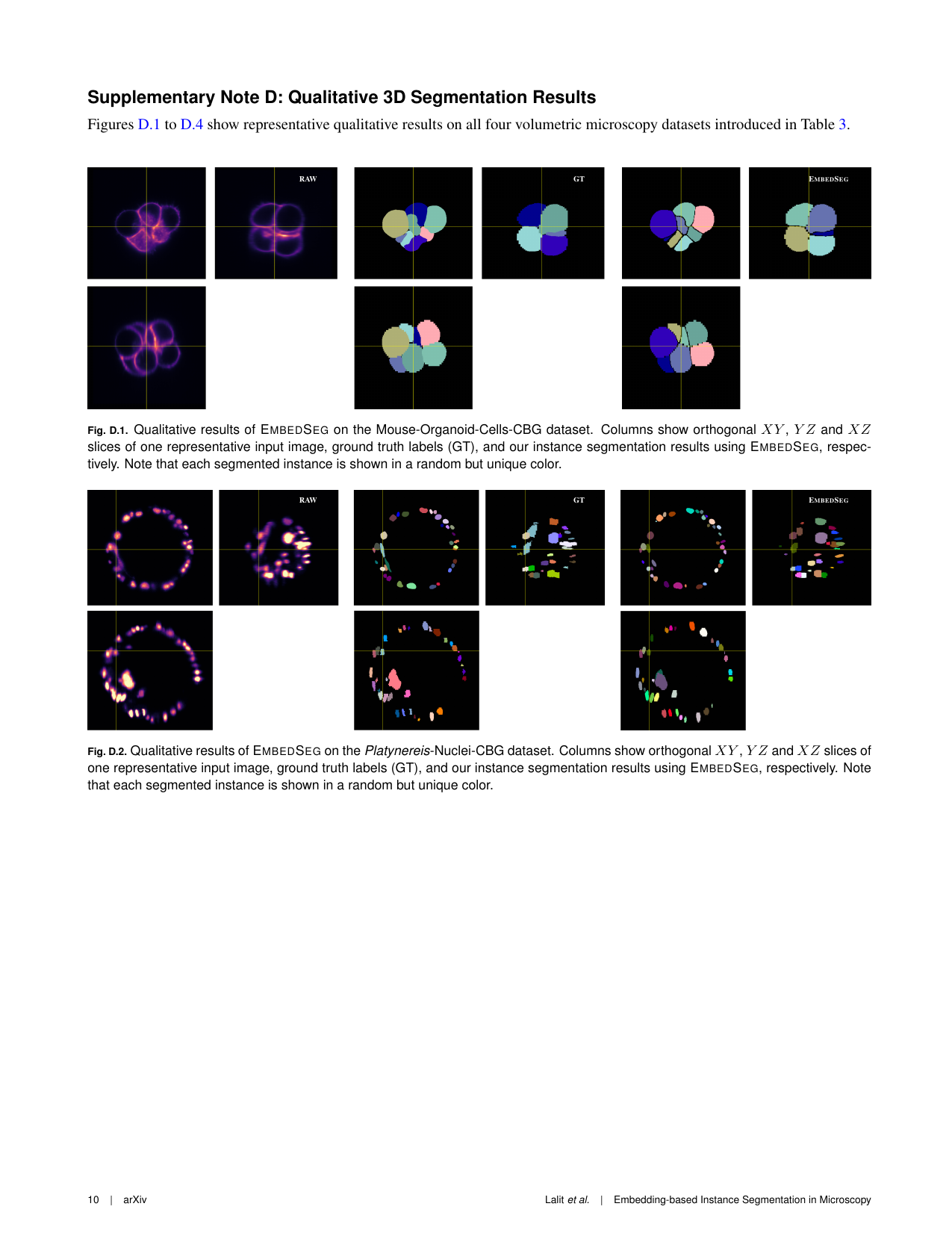

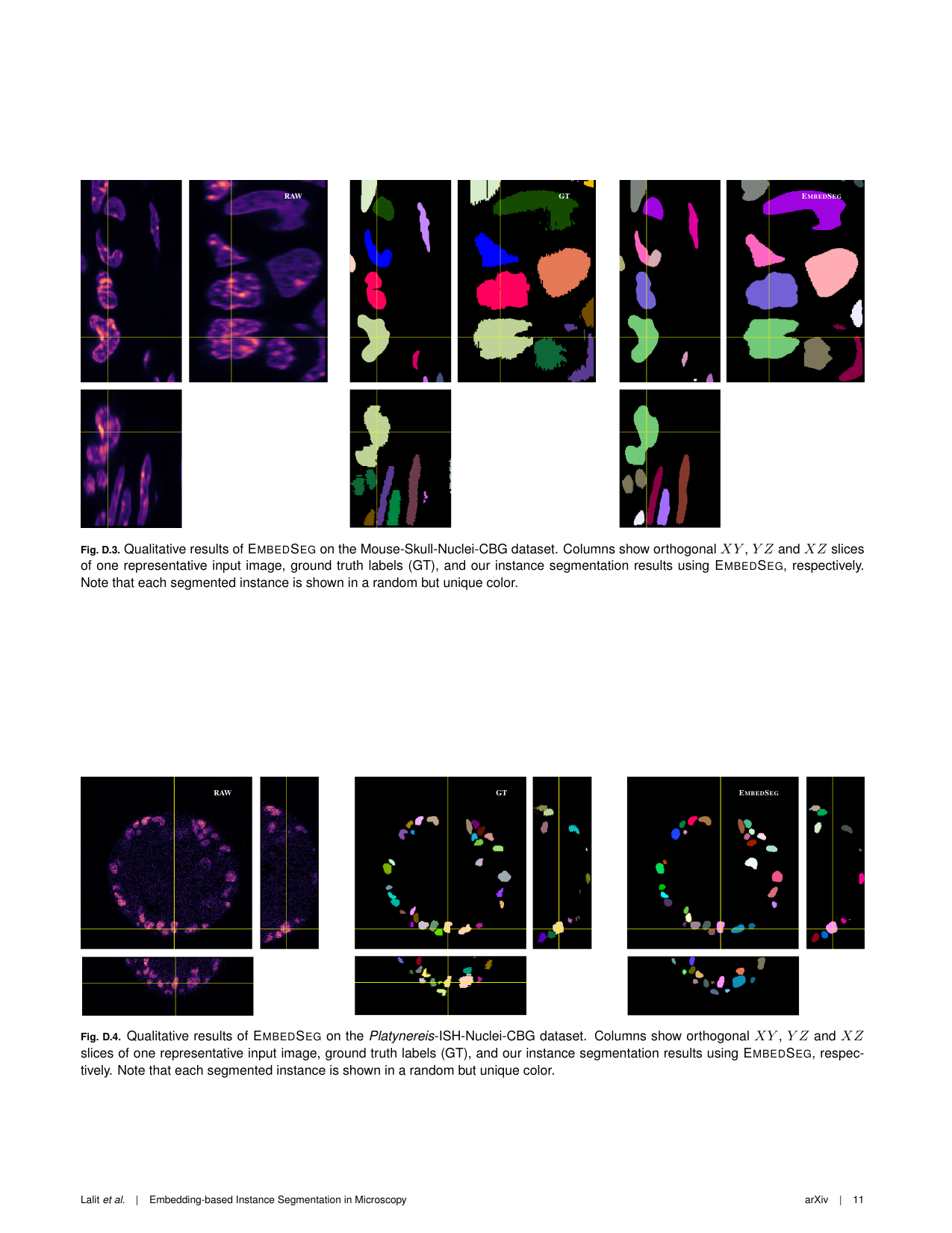

Qualitative results of


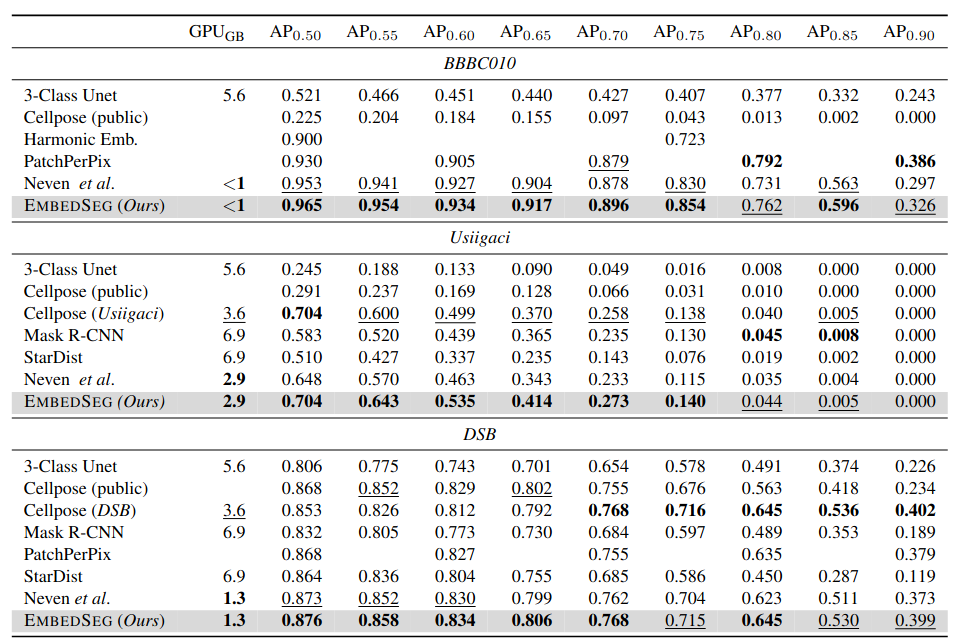

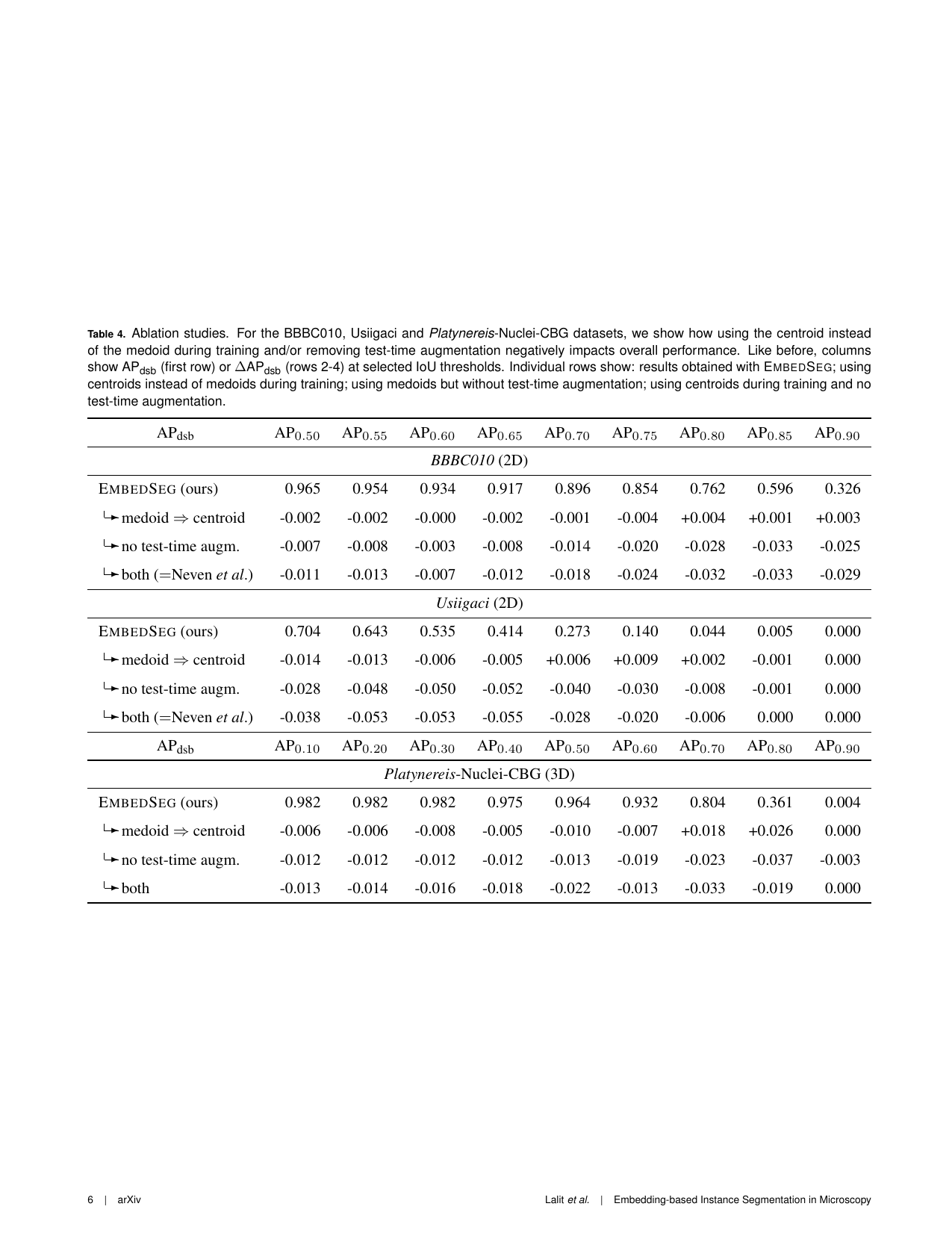

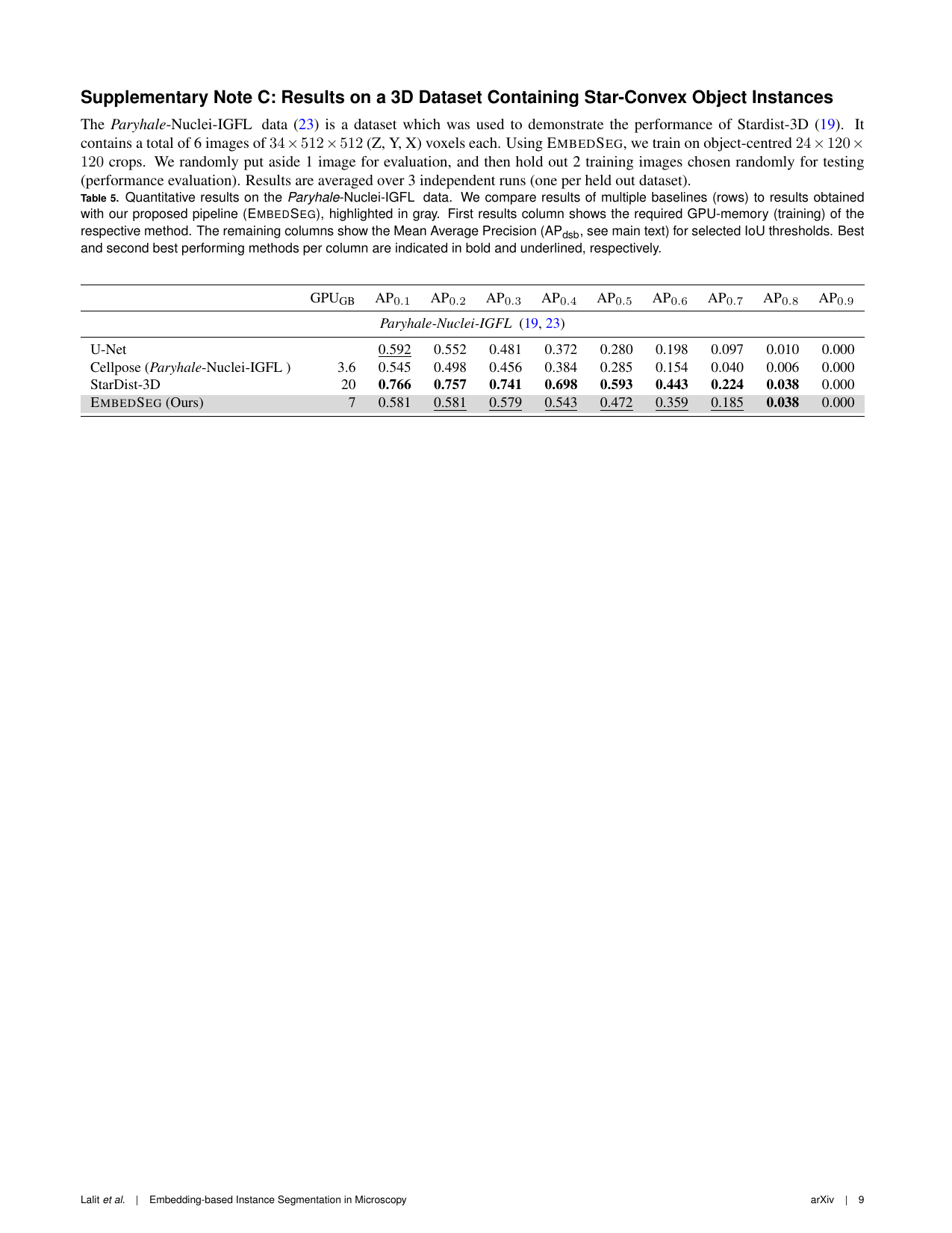

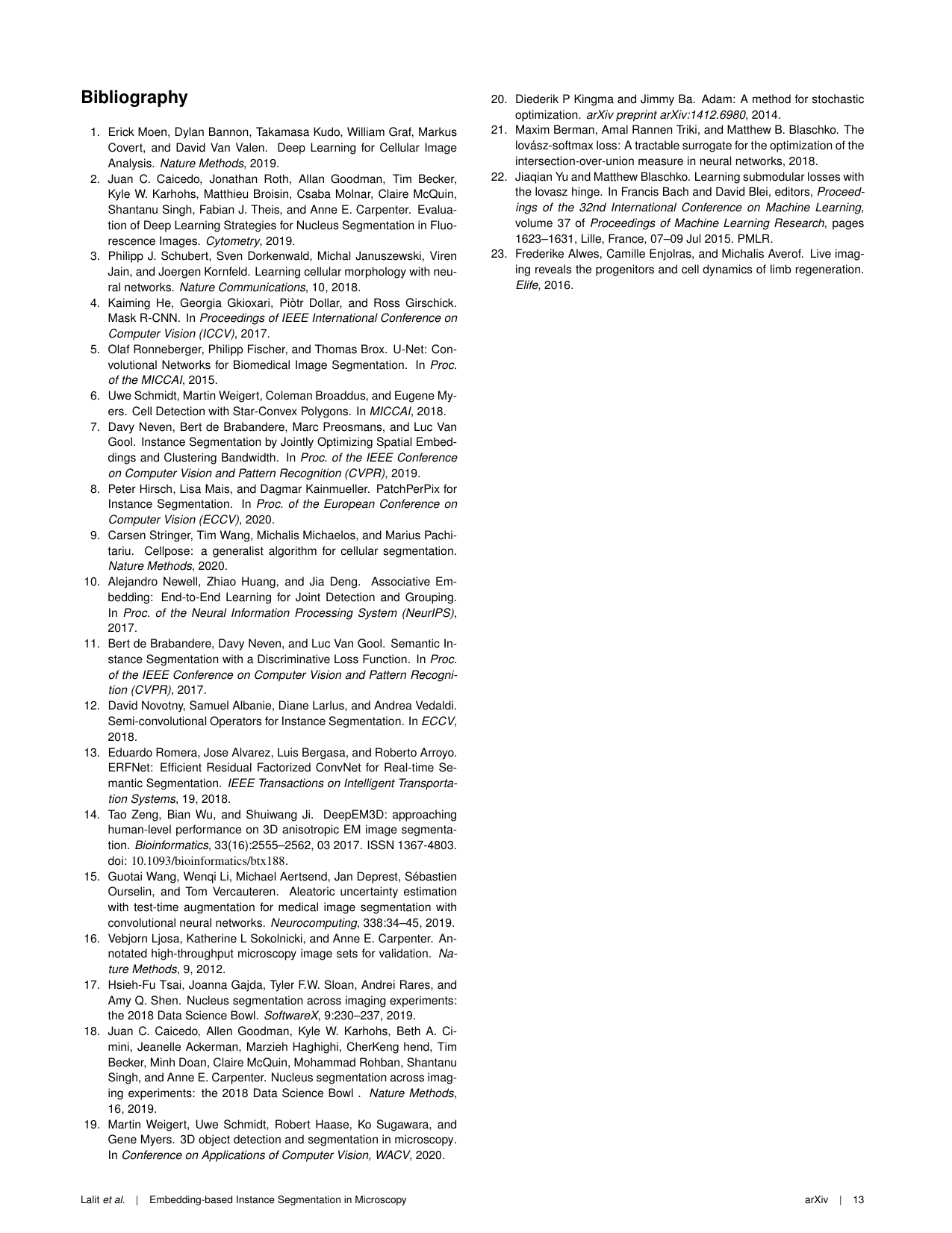

Quantitative evaluation on three datasets. For each dataset, we compare the results of multiple baselines (rows) to the results obtained with our proposed pipeline.


| Dataset | mAP @ IoU50 |
| --- | --- |
| BBBC010 | 95.2% |
| DSB | 87.7% |
| Usiigaci | 72.5% |
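The page reports mAP at an IoU threshold of 0.5 but does not define the metric. The sketch below assumes one common instance-segmentation definition, the Data Science Bowl 2018 style AP = TP / (TP + FP + FN) per image, averaged over images; whether these numbers use exactly this definition is an assumption. A predicted instance counts as a true positive if its IoU with a ground-truth instance exceeds the threshold. All function names are hypothetical.

```python
import numpy as np


def iou_matrix(gt, pred):
    """IoU between every ground-truth and predicted instance (label images, 0 = background)."""
    gt_ids = [i for i in np.unique(gt) if i != 0]
    pred_ids = [j for j in np.unique(pred) if j != 0]
    ious = np.zeros((len(gt_ids), len(pred_ids)))
    for a, i in enumerate(gt_ids):
        g = gt == i
        for b, j in enumerate(pred_ids):
            p = pred == j
            ious[a, b] = np.logical_and(g, p).sum() / np.logical_or(g, p).sum()
    return ious


def average_precision(gt, pred, thresh=0.5):
    """Per-image AP = TP / (TP + FP + FN) at one IoU threshold (assumed DSB-style definition)."""
    ious = iou_matrix(gt, pred)
    n_gt, n_pred = ious.shape
    if n_gt == 0 and n_pred == 0:
        return 1.0  # convention (assumption): an empty image predicted as empty is perfect
    # With a strict ">" and thresh >= 0.5, instances in a label image are disjoint,
    # so each instance can match at most one other; counting pairs therefore gives TP.
    tp = int((ious > thresh).sum())
    fp = n_pred - tp
    fn = n_gt - tp
    return tp / (tp + fp + fn)


def mean_average_precision(pairs, thresh=0.5):
    """Mean of per-image AP over a list of (gt, pred) label-image pairs."""
    return float(np.mean([average_precision(g, p, thresh) for g, p in pairs]))
```

For example, an image with one perfectly segmented object, one object covered only half (IoU exactly 0.5, so not a match) and one spurious prediction has TP = 1, FP = 2, FN = 1, giving AP = 1 / 4 = 0.25.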

Acknowledgements